I’m at OSCON this week. Come say hi and talk Drizzle, Rackspace, cloud, photography, vegan food or brewing.

Tag Archives: drizzle

PBMS in Drizzle

Some of you may have noticed that blob streaming has been merged into the main Drizzle tree recently. There are a few hooks inside the Drizzle kernel that PBMS uses, and everything else lives in the plugin.

For those not familiar with PBMS, it does two things: it provides a place (not in the table) for BLOBs to be stored (locally on disk or even out to S3), and it provides an HTTP interface to get and store BLOBs.

This means you can do really neat things such as have your BLOBs replicated, consistent and all those nice databasey things as well as easily access them in a scalable way (everybody knows how to cache HTTP).

This is a great addition to the AlsoSQL arsenal of Drizzle. I’m looking forward to it advancing and being adopted (now much easier since it’s in the main repository).

Drizzle @ Velocity (seemed to go well)

Monty’s talk at Velocity 2010 seemed to go down really well (at least from reading the agile admin entry on Drizzle). There are a few great bits from this article that just made me laugh:

“Oracle’s “run Java within the database” is an example of totally retarded functionality whose main job is to ruin your life”

Love it that we’re managing to get the message out.

ENUM now works properly (in Drizzle)

Over at the Drizzle blog, the recent 2010-06-07 tarball was announced. This tarball release has my fixes for the ENUM type, so that it now works as it should. I was quite amazed that such a small block of code could have so many bugs! One of the most interesting was the documented limit we inherited from MySQL (see the MySQL Docs on ENUM) of a maximum of 65,535 elements for an ENUM column.

This all started out from a quite innocent comment of Jay’s in a code review for adding support for the ENUM data type to the embedded_innodb engine. It was all pretty innocent… saying that I should use a constant instead of the magic 0x10000 number as a limit on an assert for sanity of values getting passed to the engine. Seeing as there wasn’t a constant already in the code for that (surprise number 1), I said I’d fix it properly in a separate patch (creating a bug for it so it wouldn’t get lost) and the code went in.

So, now, a few weeks after that, I got around to dealing with that bug (because hey, this was going to be an easy fix that’ll give me a nice sense of accomplishment). A quick look in the Field_enum code raised my suspicions of bugs… I initially wondered if we’d get any error message if a StorageEngine returned a table definition that had too many ENUM elements (for example, 70,000). So, I added a table to the tableprototester plugin (a simple dummy engine that is loaded for testing the parsing of specially constructed table messages) that had 70,000 elements for a single ENUM column. It didn’t throw an error. Darn. It did, however, have an incredibly large result for SHOW CREATE TABLE.

Often with bugs like this I try to see if the problem is something inherited from MySQL. I’ll often file a bug with MySQL as well if that’s the case. If I can, I’ll attach the associated patch from Drizzle that fixes the bug, sometimes with a patch written directly for and tested on MySQL (if it’s not going to take me too long). Whether these patches are ever applied is a whole other thing – and sometimes you get things like “each engine is meant to have auto_increment behave differently!” – which doesn’t inspire confidence.

But anyway, the MySQL limit is somewhere between 10850 and 10900. This is not at all what’s documented. I’ve filed the appropriate bug (Bug #54194) with reproducible test case and the bit of problematic code. It turns out that this is (yet another) limit of the FRM file. The limit is “about 64k FRM”. The bit of code in MySQL that was doing the checking for the ENUM limit was this:

    /* Hack to avoid bugs with small static rows in MySQL */
    reclength=max(file->min_record_length(table_options),reclength);
    if (info_length+(ulong) create_fields.elements*FCOMP+288+
        n_length+int_length+com_length > 65535L || int_count > 255)
    {
      my_message(ER_TOO_MANY_FIELDS, ER(ER_TOO_MANY_FIELDS), MYF(0));
      DBUG_RETURN(1);
    }

So it’s no surprise to anyone how this specific limit (the number of elements in an ENUM) got missed when I converted Drizzle from using an FRM over to a protobuf based structure.

So a bunch of other cleanup later, a whole lot of extra testing and I can pretty confidently state that the ENUM type in Drizzle does work exactly how you think it would.

Either way, if you’re getting anywhere near 10,000 choices for an ENUM column you have no doubt already lost.

New CREATE TABLE performance record!

4 min 20 sec

So next time somebody complains about NDB taking a long time in CREATE TABLE, you’re welcome to point them to this :)

- A single CREATE TABLE statement

- It had ONE column

- It was an ENUM column.

- With 70,000 possible values.

- It was 605kb of SQL.

- It ran on Drizzle

This was to test if you could create an ENUM column with greater than 2^16 possible values (you’re not supposed to be able to) – bug 589031 has been filed.

How does it compare to MySQL? Well… there are other problems (I filed Bug 54194, “ENUM limit of 65535 elements isn’t true”). Since we don’t have any limitations in Drizzle due to the FRM file format, we actually get to execute the CREATE TABLE statement.

Still, why did this take well over four minutes? Luckily, I managed to run poor man’s profiler during query execution. I very easily found out that I had this thread constantly running check_duplicates_in_interval(), which does a stupid linear search for duplicates. It turns out that for 70,000 items, this takes approximately four minutes and 19.5 seconds. I filed Bug 589055, “CREATE TABLE with ENUM fields with large elements takes forever” (where forever is defined as a bit over four minutes).

So I replaced check_duplicates_in_interval() with an implementation using a hash table (boost::unordered_set actually) as I wasn’t quite immediately in the mood for ripping out all of TYPELIB from the server. I can now run the CREATE TABLE statement in less than half a second.
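To make the difference concrete, here is a minimal sketch of a hash-based duplicate check. It is not the Drizzle patch: it uses std::unordered_set rather than boost::unordered_set so it is self-contained, the function name find_first_duplicate is made up for illustration, and it assumes plain byte-wise comparison (the real server check has to respect character sets and collations).

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <unordered_set>
#include <vector>

// Returns the index of the first element that repeats an earlier one,
// or -1 if all elements are distinct. Expected O(n) time overall.
// Assumption: exact byte-wise comparison; collations are ignored here.
long find_first_duplicate(const std::vector<std::string>& elements)
{
  std::unordered_set<std::string> seen;
  seen.reserve(elements.size());
  for (std::size_t i = 0; i < elements.size(); ++i)
  {
    // insert() returns {iterator, bool}; the bool is false when the
    // value was already in the set.
    if (!seen.insert(elements[i]).second)
      return static_cast<long>(i);
  }
  return -1;
}
```

Each element costs one expected constant-time insert instead of a scan over the other elements, which is where the drop from minutes to well under a second would come from.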

So now, I can run my test case in much less time and indeed check for correct behaviour rather quickly.

I do have an urge to find out how big I can get a valid table definition file to though…. should be over 32MB…

BLOBS in the Drizzle/MySQL Storage Engine API

Another (AFAIK) undocumented part of the Storage Engine API:

We all know what a normal row looks like in Drizzle/MySQL row format (a NULL bitmap and then column data):

Nothing that special. It’s a fixed sized buffer, Field objects reference into it, you read out of it and write the values into your engine. However, when you get to BLOBs, we can’t use a fixed sized buffer as BLOBs may be quite large. So, with BLOBs, the in-row part has two pieces. The first is the length of the BLOB (1, 2, 3 or 4 bytes – in Drizzle it’s only 3 or 4 bytes now and soon only 4 bytes once we fix a bug that isn’t interesting to discuss here). The second is a pointer to a location in memory where the BLOB is stored. So a row that has a BLOB in it looks something like this:

The size of the pointer is (of course) platform dependent. On 32bit machines it’s 4 bytes and on 64bit machines it’s 8 bytes.
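As a rough sketch of what decoding the in-row part could look like: the BlobRef struct and both function names below are hypothetical, and the code assumes the length is stored low byte first and that the pointer follows it immediately. Check the Field_blob code for your tree before relying on either assumption.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Hypothetical view of the in-row part of a BLOB column.
struct BlobRef
{
  std::uint32_t length;       // number of bytes in the BLOB
  const unsigned char* data;  // where the BLOB bytes live (not owned)
};

// Decode `length_bytes` bytes of length (assumed little-endian),
// immediately followed by a native-sized pointer.
BlobRef read_blob_ref(const unsigned char* field_ptr, unsigned length_bytes)
{
  assert(length_bytes >= 1 && length_bytes <= 4);
  BlobRef ref{0, nullptr};
  for (unsigned i = 0; i < length_bytes; ++i)
    ref.length |= static_cast<std::uint32_t>(field_ptr[i]) << (8 * i);
  // memcpy avoids an unaligned load: the pointer sits at an arbitrary
  // offset inside the row buffer.
  std::memcpy(&ref.data, field_ptr + length_bytes, sizeof(ref.data));
  return ref;
}

// The inverse, as an engine might do when handing a row back to the server.
void write_blob_ref(unsigned char* field_ptr, unsigned length_bytes,
                    const BlobRef& ref)
{
  assert(length_bytes >= 1 && length_bytes <= 4);
  for (unsigned i = 0; i < length_bytes; ++i)
    field_ptr[i] = static_cast<unsigned char>(ref.length >> (8 * i));
  std::memcpy(field_ptr + length_bytes, &ref.data, sizeof(ref.data));
}
```

Note that sizeof(ref.data) is what makes the in-row part 4 bytes longer on 64bit machines than on 32bit ones.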

Now, if I were any other source of documentation, I’d stop right here.

But I’m not. I’m a programmer writing a Storage Engine who now has the crucial question of memory management.

When your engine is given the row from the upper layer (such as doInsertRecord()/write_row()) you don’t have to worry: for the duration of the call, the memory will be there. Don’t count on it being there afterwards though, so if you’re not going to immediately splat it somewhere, make your own copy.

For reading, you are expected to provide a pointer to a location in memory that is valid until the next call to your Cursor. For example, a rnd_next() call reads a BLOB field and your engine provides a pointer. At the subsequent rnd_next() call (or at doStopTableScan()/rnd_end()), the engine can free that memory.

HOWEVER, this is true except for index_read_idx_map(), which in the default implementation in the Cursor (handler) base class ends up doing a doStartIndexScan(), index_read(), doEndIndexScan(). This means that if a BLOB was read, the engine could have (quite rightly) freed that memory already. In this case, you must keep the memory around until either a reset() or extra(HA_EXTRA_FLUSH) call.

This exception is tested (by accident) by a whole single query in type_blob.test – a monster of a query that’s about a seven way join with a group by and an order by. It would be quite possible to write a fairly functional engine and completely miss this.

Good luck.

This blog post (but not the whole blog) is published under the Creative Commons Attribution-Share Alike License. Attribution is by linking back to this post and mentioning my name (Stewart Smith).

Using the row buffer in Drizzle (and MySQL)

Here’s another bit of the API you may need to use in your storage engine (it also seems to be rather unknown). I believe the only place where this has really been documented is ha_ndbcluster.cc, so here goes….

Drizzle (through inheritance from MySQL) has its own (in memory) row format (it could be said that it has several, but we’ll ignore that for the moment for sanity). This is used inside the server for a number of things. When writing a Storage Engine all you really need to know is that you’re expected to write these into your engine and return them from your engine.

The row buffer format itself is kind-of documented (in that it’s mentioned in the MySQL Internals documentation) but everywhere that’s ever pointed to makes the (big) assumption that you’re going to be implementing an engine that just uses a more compact variant of the in-memory row format. The notable exception is the CSV engine, which only ever cares about textual representations of data (calling val_str() on a Field is pretty simple).

The basic layout is a NULL bitmap plus the data for each non-null column:

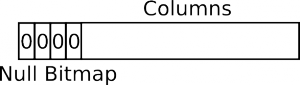

Except that the NULL bitmap is byte aligned. So in the above diagram, with four nullable columns, it would actually be padded out to 1 byte:

Each column is stored in a type-specific way.
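The byte alignment of the NULL bitmap can be sketched like this. The helper names are made up, and the bit order is an assumption (one bit per nullable column, in column order, starting at the least significant bit of the first byte, with no reserved bits); the server's real layout may differ in those details.

```cpp
#include <cassert>
#include <cstddef>

// Bytes needed for one bit per nullable column, rounded up to whole bytes.
std::size_t null_bitmap_bytes(std::size_t nullable_columns)
{
  return (nullable_columns + 7) / 8;
}

// Test the NULL bit of the n-th nullable column (0-based).
// Assumption: bits are assigned LSB-first with no reserved bits.
bool is_null(const unsigned char* row, std::size_t nullable_index)
{
  return (row[nullable_index / 8] >> (nullable_index % 8)) & 1u;
}

// Set or clear the NULL bit of the n-th nullable column (0-based).
void set_null(unsigned char* row, std::size_t nullable_index, bool value)
{
  const unsigned char mask =
      static_cast<unsigned char>(1u << (nullable_index % 8));
  if (value)
    row[nullable_index / 8] |= mask;
  else
    row[nullable_index / 8] &= static_cast<unsigned char>(~mask);
}
```

With four nullable columns, null_bitmap_bytes(4) is 1, so the column data would start at byte 1 of the row under these assumptions.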

Each Table (an instance of an open table which a Cursor is used to iterate over parts of) has two row buffers in it: record[0] and record[1]. For the most part, the Cursor implementation for your Storage Engine only ever has to deal with record[0]. However, sometimes you may be asked to read a row into record[1], so your engine must deal with that too.

A Row (no, there’s no object for that… you just get a pointer to somewhere in memory) is made up of Fields (as in Field objects). It’s really made up of lots of things, but if you’re dealing with the row format, a row is made up of fields. The Field objects let you get the value out of a row in a number of ways. For an integer column, you can call Field::val_int() to get the value as an integer, or you can call val_str() to get it as a string (this is what the CSV engine does, just calls val_str() on each Field).

The Field objects are not part of a row in any way. They instead have a pointer to record[0] stored in them. This doesn’t help you if you need to access record[1] (because that can be passed into your Cursor methods). Although the buffer passed into various Cursor methods is usually record[0] it is not always record[0]. How do you use the Field objects to access fields in the row buffer then? The answer is the Field::move_field_offset(ptrdiff_t) method. Here is how you can use it in your code:

    ptrdiff_t row_offset= buf - table->record[0];
    (**field).move_field_offset(row_offset);
    /* do things with field */
    (**field).move_field_offset(-row_offset);

Yes, this API completely sucks and is very easy to misuse and abuse – especially in error handling cases. We’re currently discussing some alternatives for Drizzle.
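One way to make the pattern harder to get wrong in error paths is a small RAII guard. This is only a sketch of the idea, not one of the alternatives being discussed for Drizzle: the Field struct here is a stand-in that models nothing but the pointer adjustment, and FieldOffsetGuard is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical stand-in for the server's Field class: it only tracks the
// pointer into the row buffer, which is all move_field_offset() adjusts.
struct Field
{
  unsigned char* ptr;
  void move_field_offset(std::ptrdiff_t offset) { ptr += offset; }
};

// Points a Field at another row buffer for the lifetime of the guard and
// moves it back in the destructor, so an early return cannot leave the
// Field shifted.
class FieldOffsetGuard
{
public:
  FieldOffsetGuard(Field& field, const unsigned char* buf,
                   const unsigned char* record0)
    : field_(field), offset_(buf - record0)
  {
    field_.move_field_offset(offset_);
  }
  ~FieldOffsetGuard() { field_.move_field_offset(-offset_); }

  FieldOffsetGuard(const FieldOffsetGuard&) = delete;
  FieldOffsetGuard& operator=(const FieldOffsetGuard&) = delete;

private:
  Field& field_;
  std::ptrdiff_t offset_;
};
```

Inside a Cursor method you would construct the guard from the buf argument and table->record[0], read the field, and let scope exit undo the move.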

This blog post (but not the whole blog) is published under the Creative Commons Attribution-Share Alike License. Attribution is by linking back to this post and mentioning my name (Stewart Smith).

HailDB, Hudson, compiler warnings and cppcheck

I’ve integrated HailDB into our Hudson setup (haildb-trunk on Hudson). I’ve also made sure that Hudson is tracking the compiler warnings. We’ve enabled more compiler warnings than InnoDB has traditionally been compiled with – this means we’ve started off with over 4,300 compiler warnings! Most of those are not going to be anything remotely harmful – however, we often find that it’s 1 in 1000 that is a real bug. I’ve managed to get it down to about 1,700 at the moment (removing a lot of harmless ones).

I’ve also enabled a cppcheck run on it. Cppcheck is a static analysis tool for C/C++. We’ve also enabled it for Drizzle (see drizzle-build-cppcheck on Hudson). When we enabled it for Drizzle, we immediately found three real bugs! There is also a coding style checker which we’ve also enabled on both projects. So far, cppcheck has not found any real bugs in HailDB, just some style warnings.

So, I encourage you to try cppcheck if you’re writing C/C++.

The rotating blades database benchmark

(and before you ask, yes “rotating blades” comes from “become a fan”)

I’m forming the ideas here first and then we can go and implement it. Feedback is much appreciated.

Two tables.

Table one looks like this:

    CREATE TABLE fan_of (
      user_id BIGINT,
      item_id BIGINT,
      PRIMARY KEY (user_id, item_id),
      INDEX (item_id)
    );

That is, two columns, both 64bit integers. The primary key covers both columns (a user cannot be a fan of something more than once) and can be used to look up all things the user is a fan of. There is also an index over item_id so that you can find out which users are a fan of an item.

The second table looks like this:

    CREATE TABLE fan_count (
      item_id BIGINT PRIMARY KEY,
      fans BIGINT
    );

Both tables start empty.

You will have 1000, 2000, 4000 and 8000 concurrent clients attempting to run the queries. These concurrent clients must behave as if they could be coming from a web server. The spirit of the benchmark is to have 8000 threads (or processes) talk to the database server independent of each other.

The following set of queries will be run a total of 23,000,000 (twenty three million) times. The my_user_id below is an incrementing ID per connection allocated by partitioning 23,000,000 evenly between all the concurrent clients (e.g. for 1000 connections each connection gets 23,000 sequential ids)
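The id partitioning can be sketched as below. The names IdRange and ids_for_client are made up for illustration, and starting the ids at 1 is an assumption; the benchmark text only asks for sequential ids split evenly.

```cpp
#include <cassert>
#include <cstdint>

// Half-open range [first, last) of user ids owned by one client.
struct IdRange
{
  std::uint64_t first;
  std::uint64_t last;
};

// Split `total_ids` sequential ids (assumed to start at 1) evenly across
// `clients`. Requires an exact split, which holds for 23,000,000 with
// 1000, 2000, 4000 and 8000 clients.
IdRange ids_for_client(std::uint64_t total_ids, std::uint64_t clients,
                       std::uint64_t client_index)
{
  assert(clients > 0 && total_ids % clients == 0);
  assert(client_index < clients);
  const std::uint64_t per_client = total_ids / clients;
  const std::uint64_t first = 1 + client_index * per_client;
  return IdRange{first, first + per_client};
}
```

With 8000 clients each one gets 2,875 ids, so the per-connection work shrinks as concurrency grows while the total stays at 23,000,000.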

You must run the following queries.

- How many fans are there of item 12345678 (e.g. SELECT fans FROM fan_count WHERE item_id=12345678)

- Is my_user_id already a fan of item 12345678 (e.g. SELECT user_id FROM fan_of WHERE user_id=my_user_id AND item_id=12345678)

- The next two queries MUST be in the same transaction:

  - my_user_id becomes a fan of item 12345678 (e.g. INSERT INTO fan_of (user_id,item_id) values (my_user_id, 12345678))

  - increment count of fans (e.g. UPDATE fan_count SET fans=fans+1 WHERE item_id=12345678)

For the first query you are allowed to use a caching layer (such as memcached) but the expiry time must be 5 seconds or less.

You do not have to use SQL. You must however obey the transaction boundary above. The insert and the update must be part of the same transaction.

Results should include: min, avg, max response time for each query as well as the total time to execute the benchmark.

Data must be durable to a machine being switched off and must still be available with that machine switched off. If committing to local disk, you must also replicate to another machine. If running asynchronous replication, the clock does not stop until all changes have been applied on the slave. If doing asynchronous replication, you must also record the replication delay throughout the entire test.

In the event of timeout or deadlock in doing the insert and update part, you must go back to the first query (how many fans) and retry. Having to retry does not count towards the 23,000,000 runs.
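The retry rule might look something like this in a client. Everything here is hypothetical scaffolding: the FanQueries callbacks stand in for whatever database calls (SQL or otherwise) your client makes, and only the control flow is the point.

```cpp
#include <cassert>
#include <functional>

// Outcome of one attempt at the INSERT + UPDATE transaction.
enum class TxnResult { Committed, Retry };

// Hypothetical callbacks standing in for real database calls.
struct FanQueries
{
  std::function<void()> read_fan_count;         // query 1
  std::function<void()> check_already_fan;      // query 2
  std::function<TxnResult()> insert_and_count;  // queries 3 and 4, one txn
};

// Runs one benchmark iteration and returns how many passes it took.
// A timeout or deadlock (TxnResult::Retry) restarts from the first query;
// only the final, committed pass counts towards the 23,000,000 runs.
unsigned run_iteration(const FanQueries& q)
{
  unsigned attempts = 0;
  for (;;)
  {
    ++attempts;
    q.read_fan_count();
    q.check_already_fan();
    if (q.insert_and_count() == TxnResult::Committed)
      return attempts;
  }
}
```

A real harness would also time each query separately, since the results have to report min, avg and max response time per query.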

At the end of the benchmark, the query SELECT fans FROM fan_count WHERE item_id=12345678 should return 23,000,000.

Yes, this is a very evil benchmark. It seems to be fairly indicative of the kind of peak load that can be experienced by a bunch of Web 2.0 sites that have “like” or “become a fan” style buttons. I fully expect the following:

- Pretty much all systems will nosedive in performance after 1000 concurrent clients

- Transaction rollbacks due to deadlock detection or lock wait timeouts to be frequent.

- Many existing systems and setups to not complete it in reasonable time.

- A solution using Scale Stack to be an early winner (backed by MySQL or Drizzle)

- Somebody influenced by Domas turning InnoDB deadlock detection off very quickly.

- Somebody to call this benchmark “stupid” (that person will have a system that fails dismally at this benchmark)

- Somebody who actually has any knowledge of modern large scale web apps to suggest improvements

- Nobody even attempting to benchmark the Oracle database

- Somebody submitting results with MySQL to not wait until the replication stream has finished applying.

- Some NoSQL systems to suck considerably more than their SQL counterparts.

Storage Engine API: write_row, CREATE SELECT and DDL

(this probably applies exactly the same for MySQL and Drizzle… but I’m just speaking about current Drizzle here)

In my current merge request for the embedded-innodb-create-select-transaction-arrgh branch (also see this specific revision), you’ll notice an odd hoop that we have to jump through to make CREATE SELECT statements work with an engine such as InnoDB.

Basically, this is what happens:

- start transaction

- start executing SELECT QUERY (well, prepare executing it and fetch a row)

- create table

- attempt to insert into table

But… we have to do the DDL statement (i.e. the CREATE TABLE) in its own transaction. This means that the outer transaction (running the SELECT) shouldn’t be able to see it. Except it does. We can create a cursor on this table. However, when we try and do something with it (e.g. ib_cursor_first()) we then get the error message DB_MISSING_HISTORY from InnoDB. With a data dictionary that was REPEATABLE READ, we shouldn’t have this problem. However, we don’t have that.

So? What do we do? If we’re in ::write_row and we get an error and we’re running a SQLCOM_CREATE_TABLE sql_command (yes, we get to poke into current_session->lex->sql_command to find this out) we just magically restart the transaction so that we can (properly) see the created table and write rows to it.

This is not a sane part of the interface; it won’t be an issue for many engines but it is needed here.

This blog post (but not the whole blog) is published under the Creative Commons Attribution-Share Alike License. Attribution is by linking back to this post and mentioning my name (Stewart Smith).

Interesting Videos from the MySQL Conference and Expo

There’s a good number of videos appearing online from the MySQL Conference and Expo that was on last week.

Here’s a short list of interesting things to look at if you weren’t able to make the sessions. Obviously, this is from my view as a Drizzle developer. There were other interesting things, but this list is more focused towards where my Drizzle brain is stimulated.

Drizzle Developer Day is TODAY!

http://drizzle.org/wiki/Drizzle_Developer_Day_2010

Upstairs in the Hyatt right near the Speaker room (down the hallway on the left from the main conference registration desk).

See you here!

Announcing HailDB

I just announced our continuation of the Embedded InnoDB project under the name of HailDB. Check out the announcement over at http://www.haildb.com/.

HailDB is a relational database that is embeddable within applications. You embed HailDB by linking to a shared library and calling a clean and simple API. HailDB is a continuation of the Embedded InnoDB project. It is not itself a database server, but is a library implementing the storage layer. With the addition of the HailDB plugin to Drizzle you get a full SQL interface.

Read more at http://www.haildb.com

Embedded InnoDB is in the tree!

Well… the start of it :)

I’ve taken the approach of taking tiny incremental steps (and getting review for each step) in implementing a Storage Engine based on the Embedded InnoDB library. What hit lp:drizzle (the trunk branch, for the 2010-04-07 milestone tarball) is only a handful of these small steps, so this engine is not remotely ready for end users.

There should be more of my Embedded InnoDB work hitting the tree in the upcoming days/weeks, enough to get it to a state that one could describe as functional :)

AlsoSQL

So there’s a bit of a swelling around the idea of NoSQL. That is, databases that don’t have an SQL interface in front of them – with the promise of better performance. With a well designed backend, this is no doubt the case.

A flexible query language is rather useful though. I think we’ll see the rise of AlsoSQL. That is, systems that present a fast and simple protocol along with a SQL interface.

This hybrid system has seen use for many years. MySQL Cluster is one such example. SQL through MySQL Server, NoSQL through NDB API.

With Drizzle, I feel we’ll be in a pretty good position to offer non-sql based protocols and access methods to existing storage engines.

The Drizzle (and MySQL) Key tuple format

Here’s something that’s not really documented anywhere (unless you count ha_innodb.cc as a source of server documentation). You may have some idea about the MySQL/Drizzle row buffer format. This is passed around the storage engine interface: in for write_row and update_row and out for the various scan and index read methods.

If you want to see the docs for it that exist in the code, check out store_key_val_for_row in ha_innodb.cc.

However, there is another format that is passed to your engine (and that your engine is expected to understand) and for lack of a better name, I’m going to call it the key tuple format. The first place you’ll probably see this is when implementing the index_read function for a Cursor (or handler in MySQL speak).

You get two things: a pointer to the buffer and the length of the buffer. Since a key can be made up of multiple parts, some of which can be NULL and some of which can be of variable length, this buffer is not (usually) a simple value. If you are starting out in your engine development, you can use this buffer blindly as a single value for non-nullable indexes with only 1 column.

The basic format is this:

- The buffer is in-order of the index. First column in the index is first in the buffer, second second etc.

- The buffer must be zero-filled. The server kernel will use memcmp to compare two key values.

- If the column is NULLable, then the first byte is set to 1 if the column is null. Else, 0 means not-null.

- From ha_innodb.cc (for BLOBs, which I haven’t put in embedded_innodb yet): If the column is of a BLOB type (it must be a column prefix field in this case), then we put the length of the data in the field to the next 2 bytes, in the little-endian format. If the field is SQL NULL, then these 2 bytes are set to 0. Note that the length of data in the field is <= column prefix length.

- For fixed length fields (such as int), the next max field length bytes are for that field.

- For VARCHAR, there is always a 2 byte (in little endian) length. This is different to the row format, which may have 1 or 2 bytes. In the key tuple format it is ALWAYS two bytes.
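To make the list above a bit more tangible, here is a sketch of building a key tuple for an index over a nullable INT and a VARCHAR. The function names are made up, and two details are assumptions rather than things stated above: that the integer itself is stored low byte first, and that a VARCHAR part always occupies 2 + max length bytes with zero padding.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Append a 4-byte integer key part. A nullable part is preceded by one
// byte: 1 for NULL, 0 for not NULL. A NULL leaves the data bytes zeroed.
// Assumption: the integer is stored little-endian.
void append_int_key_part(std::vector<std::uint8_t>& key, bool nullable,
                         bool is_null, std::uint32_t value)
{
  assert(nullable || !is_null);
  if (nullable)
    key.push_back(is_null ? 1 : 0);
  for (int i = 0; i < 4; ++i)
    key.push_back(is_null ? 0 : static_cast<std::uint8_t>(value >> (8 * i)));
}

// Append a VARCHAR key part: always a 2-byte little-endian length, then
// the bytes, zero-padded to max_length so memcmp over whole keys works.
// Assumption: the part always occupies 2 + max_length bytes.
void append_varchar_key_part(std::vector<std::uint8_t>& key, bool nullable,
                             bool is_null, const std::string& value,
                             std::size_t max_length)
{
  assert(nullable || !is_null);
  assert(value.size() <= max_length && max_length <= 0xFFFF);
  if (nullable)
    key.push_back(is_null ? 1 : 0);
  const std::size_t length = is_null ? 0 : value.size();
  key.push_back(static_cast<std::uint8_t>(length & 0xFF));
  key.push_back(static_cast<std::uint8_t>(length >> 8));
  key.insert(key.end(), value.begin(),
             value.begin() + static_cast<std::ptrdiff_t>(length));
  key.insert(key.end(), max_length - length, 0);
}
```

Parsing the buffer in index_read is the same walk in reverse: for each key part, consume the optional NULL byte, then either the fixed-width bytes or the 2-byte length plus data.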

I’ll discuss the use of this for rnd_pos() and position() in a later post…

This blog post (but not the whole blog) is published under the Creative Commons Attribution-Share Alike License. Attribution is by linking back to this post and mentioning my name (Stewart Smith).

on TableIdentifier (and the death of path as a parameter to StorageEngines)

As anybody who has ever implemented a Storage Engine for MySQL will know, a bunch of the DDL calls got passed a parameter named “path”. This was a filesystem path. Depending on what platform you were running, it may contain / or \ (and no, it’s not consistent on each platform). Add to that the difference if you were creating temporary tables (table name of #sql_somethingsomething) and the difference if you were one of the two (built in) engines that were able to be used for creating internal temporary tables (temp tables that are created during query execution that do not belong in a schema). Well… you had a bit of a mess.

My earlier attempts involved splitting everything up into two strings: schema name and table name. This ended badly. The final architecture we decided on was to have an object passed around that would deal with various transformations (from what the user entered to what we can store on file systems, or to what temporary table maps to what unique name). This is TableIdentifier.

Brian has been introducing it around the code for a while now, and we’ve now got it into most of the places where table names are passed to Storage Engines. This means that if you’re writing a Storage Engine that doesn’t just blindly store things in files, you can sensibly use the getSchemaName() and getTableName() methods to call your API.

One last bit of evil….

    drizzle> select libtcc("#include <string.h>\n#include <stdlib.h>\nint foo(char* s) { char *a= malloc(1000); return snprintf(s, 100, \"%p\", a); }") as RESULT;
    +-----------+
    | RESULT    |
    +-----------+
    | 0x199c610 |
    +-----------+
    1 row in set (0 sec)

    drizzle> select libtcc("#include <string.h>\n#include <stdlib.h>\nint foo(char* s) { char *a= 0x199c610; strcpy(a, \"Hello World!\"); strcpy(s,\"done\"); return strlen(s); }") as result;
    +--------+
    | result |
    +--------+
    | done   |
    +--------+
    1 row in set (0.01 sec)

    drizzle> select libtcc("#include <string.h>\n#include <stdlib.h>\nint foo(char* s) { char *a= 0x199c610; strcpy(s, a); return strlen(s); }") as result;
    +--------------+
    | result       |
    +--------------+
    | Hello World! |
    +--------------+
    1 row in set (0.01 sec)

    drizzle> select libtcc("#include <string.h>\n#include <stdlib.h>\nint foo(char* s) { char *a= 0x19a9bc0; free(a); strcpy(s,\"done\"); return strlen(s); }") as result;
    +--------+
    | result |
    +--------+
    | done   |
    +--------+
    1 row in set (0 sec)

A MD5 stored procedure for Drizzle… in C

So, just in case that wasn’t evil enough for you… perhaps you have something you want to know the MD5 checksum of. So, you could just do this:

    drizzle> select md5('Hello World!');
    +----------------------------------+
    | md5('Hello World!')              |
    +----------------------------------+
    | ed076287532e86365e841e92bfc50d8c |
    +----------------------------------+
    1 row in set (0 sec)

But that is soooo boring.

Since we have the SSL libs already loaded into Drizzle, and using my very evil libtcc plugin… we could just implement it in C. We can even use malloc!

    drizzle> SELECT LIBTCC("#include <string.h>\n#include <stdlib.h>\n#include <openssl/md5.h>\nint foo(char* s) { char *a = malloc(100); MD5_CTX context; unsigned char digest[16]; MD5_Init(&context); strcpy(a,\"Hello World!\"); MD5_Update(&context, a, strlen(a)); MD5_Final(digest, &context); snprintf(s, 33, \"%02x%02x%02x%02x%02x%02x%02x%02x%02x%02x%02x%02x%02x%02x%02x%02x\", digest[0], digest[1], digest[2], digest[3],digest[4], digest[5], digest[6], digest[7],digest[8], digest[9], digest[10], digest[11],digest[12], digest[13], digest[14], digest[15]); free(a); return 32; }") AS RESULT;
    +----------------------------------+
    | RESULT                           |
    +----------------------------------+
    | ed076287532e86365e841e92bfc50d8c |
    +----------------------------------+
    1 row in set (0.01 sec)

Currently the parameter is static in the C version due to me not having… well.. done a good job implementing the calling of C code.