Here’s another bit of the API you may need to use in your storage engine. It also seems to be rather unknown: I believe the only place where this has really been documented is ha_ndbcluster.cc, so here goes…

Drizzle (through inheritance from MySQL) has its own (in memory) row format (it could be said that it has several, but we’ll ignore that for the moment for sanity). This is used inside the server for a number of things. When writing a Storage Engine all you really need to know is that you’re expected to write these into your engine and return them from your engine.

The row buffer format itself is kind-of documented (in that it’s mentioned in the MySQL Internals documentation), but everything you ever get pointed to makes the (big) assumption that you’re going to be implementing an engine that just uses a more compact variant of the in-memory row format. The notable exception is the CSV engine, which only ever cares about textual representations of data (calling val_str() on a Field is pretty simple).

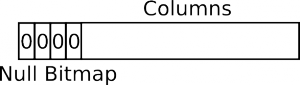

The basic layout is a NULL bitmap plus the data for each non-null column:

Except that the NULL bitmap is byte aligned. So in the above diagram, with four nullable columns, it would actually be padded out to 1 byte.


Each column is stored in a type-specific way.
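To make the byte alignment concrete, here is a minimal sketch of the bitmap arithmetic. The helper names (`null_bitmap_bytes`, `column_is_null`, `set_column_null`) are made up for illustration, and the bit numbering (bit i of the bitmap belongs to nullable column i, lowest bit first) is an assumption of this toy layout, not a statement about what the server actually does.

```cpp
#include <cassert>
#include <cstddef>

// Bytes needed for a NULL bitmap with one bit per nullable column,
// rounded up to a whole byte (4 columns -> 1 byte, 9 columns -> 2 bytes).
constexpr std::size_t null_bitmap_bytes(std::size_t nullable_columns) {
    return (nullable_columns + 7) / 8;
}

// Toy convention (an assumption): the bitmap sits at the start of the row,
// and a set bit means "this column is NULL".
inline bool column_is_null(const unsigned char* row, std::size_t column) {
    assert(row != nullptr);
    return ((row[column / 8] >> (column % 8)) & 1u) != 0;
}

// Mark a column as NULL in the toy bitmap.
inline void set_column_null(unsigned char* row, std::size_t column) {
    assert(row != nullptr);
    row[column / 8] |= static_cast<unsigned char>(1u << (column % 8));
}
```

With four nullable columns, `null_bitmap_bytes(4)` gives 1, which matches the padded diagram: four bits are used and the other four are just padding.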

Each Table (an instance of an open table, parts of which a Cursor iterates over) has two row buffers in it: record[0] and record[1]. For the most part, the Cursor implementation for your Storage Engine only ever has to deal with record[0]. However, sometimes you may be asked to read a row into record[1], so your engine must deal with that too.

A Row (no, there’s no object for that… you just get a pointer to somewhere in memory) is made up of Fields (as in Field objects). It’s really made up of lots of things, but if you’re dealing with the row format, a row is made up of fields. The Field objects let you get the value out of a row in a number of ways. For an integer column, you can call Field::val_int() to get the value as an integer, or you can call val_str() to get it as a string (this is what the CSV engine does, just calls val_str() on each Field).
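As a rough illustration of the "same value, several views" idea, here is a toy stand-in for a Field over a 32-bit integer column. `ToyField` and `row_to_csv` are hypothetical names, and the real val_str() does not have this signature; only the method names are borrowed to show the shape of what the CSV engine does.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Toy stand-in for a Field over an int32 column. This is NOT Drizzle's Field
// class; it only remembers where in the row buffer its value lives.
class ToyField {
public:
    explicit ToyField(const unsigned char* ptr) : ptr_(ptr) { assert(ptr != nullptr); }

    // The column value as an integer.
    std::int64_t val_int() const {
        std::int32_t v;
        std::memcpy(&v, ptr_, sizeof v);
        return v;
    }

    // The same value rendered as text.
    std::string val_str() const { return std::to_string(val_int()); }

private:
    const unsigned char* ptr_;
};

// CSV-style output: ask every field for its textual form and join with commas.
inline std::string row_to_csv(const std::vector<ToyField>& fields) {
    std::string line;
    for (std::size_t i = 0; i < fields.size(); ++i) {
        if (i != 0) line += ',';
        line += fields[i].val_str();
    }
    return line;
}
```

The point is only that the engine never has to understand the bytes itself: it asks each field for whichever representation it wants.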

The Field objects are not part of a row in any way. They instead have a pointer to record[0] stored in them. This doesn’t help you if you need to access record[1] (because that can be passed into your Cursor methods). Although the buffer passed into various Cursor methods is usually record[0] it is not always record[0]. How do you use the Field objects to access fields in the row buffer then? The answer is the Field::move_field_offset(ptrdiff_t) method. Here is how you can use it in your code:

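```cpp
// Distance from record[0] to the buffer we were actually handed.
ptrdiff_t row_offset= buf - table->record[0];

(**field).move_field_offset(row_offset);

// ... do things with field ...

// Always move the field back afterwards.
(**field).move_field_offset(-row_offset);
```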

Yes, this API completely sucks and is very easy to misuse and abuse – especially in error handling cases. We’re currently discussing some alternatives for Drizzle.
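One way to make the move-and-restore dance harder to get wrong in error paths is a small scope guard that undoes the move in its destructor. The sketch below is only that, a sketch: `ToyIntField` is a stand-in I made up so the example compiles on its own, `FieldOffsetGuard` and `read_from_buffer` are hypothetical names, and none of this is an existing Drizzle API or one of the alternatives under discussion.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Toy stand-in for a Field pointing into record[0]. NOT Drizzle's Field class;
// only the move_field_offset() name mirrors the real method.
class ToyIntField {
public:
    explicit ToyIntField(unsigned char* ptr) : ptr_(ptr) { assert(ptr != nullptr); }

    void move_field_offset(std::ptrdiff_t offset) { ptr_ += offset; }

    std::int32_t val_int() const {
        std::int32_t v;
        std::memcpy(&v, ptr_, sizeof v);
        return v;
    }

private:
    unsigned char* ptr_;
};

// Hypothetical guard: moves the field on construction and moves it back on
// destruction, so early returns and exceptions cannot leave it displaced.
class FieldOffsetGuard {
public:
    FieldOffsetGuard(ToyIntField& field, std::ptrdiff_t offset)
        : field_(field), offset_(offset) {
        field_.move_field_offset(offset_);
    }
    ~FieldOffsetGuard() { field_.move_field_offset(-offset_); }

    FieldOffsetGuard(const FieldOffsetGuard&) = delete;
    FieldOffsetGuard& operator=(const FieldOffsetGuard&) = delete;

private:
    ToyIntField& field_;
    std::ptrdiff_t offset_;
};

// Read the field's value out of buf, which may or may not be record0.
// Both pointers are assumed to point into the same allocation.
inline std::int32_t read_from_buffer(ToyIntField& field,
                                     unsigned char* buf,
                                     unsigned char* record0) {
    FieldOffsetGuard guard(field, buf - record0);
    return field.val_int();
}
```

After `read_from_buffer` returns, the field points at record[0] again, whichever way the function was left.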

This blog post (but not the whole blog) is published under the Creative Commons Attribution-Share Alike License. Attribution is by linking back to this post and mentioning my name (Stewart Smith).
